Paper Summary: Practical Byzantine Fault Tolerance (PBFT)

In this post, I will explain the consensus algorithm called Practical Byzantine Fault Tolerance (PBFT). PBFT is a Byzantine fault-tolerant protocol for state machine replication. State machine replication keeps an online service available despite failures by replicating the system state across multiple machines. Because the state is replicated, the replicas must be kept in sync, and PBFT is the consensus algorithm that does this.

PBFT tolerates Byzantine faults in an asynchronous network, which is what makes it practical. Byzantine behaviors such as software errors and malicious attacks can cause serious damage to online services. PBFT assumes an asynchronous network model for safety and a Byzantine failure model in which a limited number of nodes can behave arbitrarily, and it uses a view-change protocol to guarantee liveness. PBFT has been used in Hyperledger Fabric and works in an asynchronous environment like the internet.

A client sends a signed request to the primary node and waits for matching replies sent directly from at least F+1 replicas, where F is the maximum number of faulty nodes. If the client does not hear back soon enough, it broadcasts the request to all replicas and keeps resending it until the request is executed. This retry loop makes progress only if messages are eventually delivered, hence weak synchrony. The primary assigns an order (a sequence number) to every client request. The protocol assumes that all replicas can verify signatures, and it relies on a bound on message delays for liveness: if responses could be delayed arbitrarily forever, liveness could not be guaranteed.
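The client-side acceptance rule above can be sketched in a few lines. This is a minimal illustration, not the paper's implementation: the function name `accepted_result` and the `(replica_id, result)` reply format are assumptions, and signature checking is assumed to have happened before the replies reach this function.

```python
from collections import Counter


def accepted_result(replies, f):
    """Return the result vouched for by at least f+1 distinct replicas, else None.

    `replies` is an iterable of (replica_id, result) pairs (hypothetical format).
    Signatures are assumed to be verified already.
    """
    latest = {}
    for replica_id, result in replies:
        # Keep only one reply per replica so a faulty node cannot vote twice.
        latest[replica_id] = result

    counts = Counter(latest.values())
    for result, votes in counts.most_common():
        if votes >= f + 1:
            return result
    return None
```

With F+1 matching replies, at least one comes from a non-faulty replica, which is why the client can trust the result without waiting for everyone.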

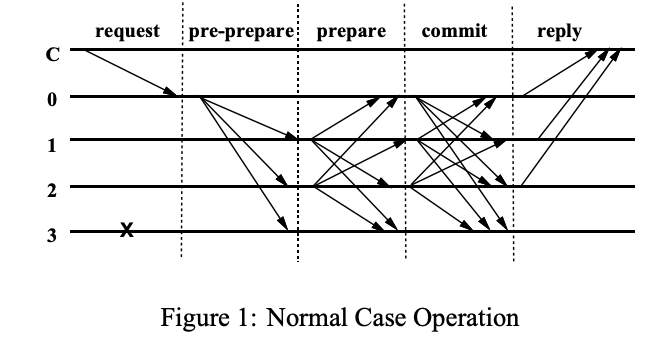

The above figure shows the three phases of communication in the normal case of PBFT: pre-prepare, prepare, and commit. In the pre-prepare phase, the primary assigns a sequence number to the request and multicasts a pre-prepare message to all backups. A backup accepts the pre-prepare only if it has not already accepted a different pre-prepare message for the same view and sequence number. The outcome of pre-prepare is that backups know they need to communicate with each other to see whether enough of them agree. Each backup multicasts a prepare message to all other replicas and adds both the pre-prepare and prepare messages to its log. The commit phase makes the order stable across views. When prepared becomes true (the replica has logged the pre-prepare and 2F matching prepare messages), a replica (including the primary) multicasts a commit message that it signs itself. Replicas accept a commit message and insert it in the log provided the signature is valid and the view and sequence number match what the replica expects.
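As a rough sketch of the bookkeeping behind these three phases, the class below tracks the two predicates from the paper: `prepared` (a pre-prepare plus 2F matching prepares) and `committed_local` (prepared plus 2F+1 matching commits). The class name `ReplicaLog`, its method names, and the use of plain strings as digests are assumptions made for illustration; networking and signature checks are left out.

```python
class ReplicaLog:
    """Per-replica message log for the normal-case protocol (illustrative only)."""

    def __init__(self, f):
        self.f = f
        self.pre_prepare = {}  # (view, seq) -> digest of the accepted pre-prepare
        self.prepares = {}     # (view, seq, digest) -> set of sender ids
        self.commits = {}      # (view, seq, digest) -> set of sender ids

    def on_pre_prepare(self, view, seq, digest):
        # Accept unless a different digest was already accepted for (view, seq).
        accepted = self.pre_prepare.setdefault((view, seq), digest)
        return accepted == digest

    def on_prepare(self, view, seq, digest, sender):
        self.prepares.setdefault((view, seq, digest), set()).add(sender)

    def on_commit(self, view, seq, digest, sender):
        self.commits.setdefault((view, seq, digest), set()).add(sender)

    def prepared(self, view, seq):
        digest = self.pre_prepare.get((view, seq))
        if digest is None:
            return False
        # 2F prepares from distinct backups that match the pre-prepare.
        return len(self.prepares.get((view, seq, digest), ())) >= 2 * self.f

    def committed_local(self, view, seq):
        if not self.prepared(view, seq):
            return False
        digest = self.pre_prepare[(view, seq)]
        # 2F+1 matching commits, possibly including this replica's own.
        return len(self.commits.get((view, seq, digest), ())) >= 2 * self.f + 1
```

Keying the sets by sender id means duplicate messages from the same replica are counted once, which is the property the quorum thresholds depend on.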

PBFT uses several cryptographic techniques: public-key signatures, message authentication codes (MACs), and message digests. The adversary is computationally bounded (a faulty node cannot subvert the cryptographic techniques) and cannot delay messages forever. MACs authenticate normal-case messages to improve performance, while view-change and new-view messages carry digital signatures. Clients are more or less trusted and can request any operation, with the caveat that the replicas must agree on it, so a malicious client can still do damage, but the damage is applied consistently across replicas.
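To make the difference between a digest and a MAC concrete, here is a small sketch using Python's standard `hashlib` and `hmac` modules. It substitutes SHA-256 and HMAC-SHA256 for whatever primitives the original implementation used, and the helper names (`digest`, `make_mac`, `verify_mac`) are hypothetical.

```python
import hashlib
import hmac


def digest(message: bytes) -> str:
    """Fixed-size fingerprint of a message; needs no key."""
    return hashlib.sha256(message).hexdigest()


def make_mac(key: bytes, message: bytes) -> bytes:
    """Authentication tag computed with a secret key shared by two parties."""
    return hmac.new(key, message, hashlib.sha256).digest()


def verify_mac(key: bytes, message: bytes, tag: bytes) -> bool:
    """Check a tag in constant time to avoid leaking information via timing."""
    return hmac.compare_digest(make_mac(key, message), tag)
```

A MAC is cheap to compute but only convinces the holder of the shared key, whereas a digital signature can be shown to third parties, which is one way to read why the rarer view-change and new-view messages are signed.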

Safety is independent of network failures and does not rely on synchrony. That is, even if the network is severely partitioned, so that more than F nodes are isolated (but across all partitions there are still at most F faulty nodes), the protocol is still safe. However, liveness is only guaranteed with at most F total failures, counting both node and network failures. With 3F+1 replicas in total, this means at least 2F+1 nodes must be reachable.
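The arithmetic behind these thresholds is short enough to check directly. The sketch below assumes the standard PBFT setting of 3F+1 replicas and quorums of 2F+1; the function name `quorum_sizes` is made up for this post.

```python
def quorum_sizes(f):
    """Return (n, quorum, min_overlap, honest_in_overlap) for f faulty replicas."""
    n = 3 * f + 1                        # total replicas
    quorum = 2 * f + 1                   # replicas that must agree
    min_overlap = 2 * quorum - n         # smallest intersection of two quorums
    honest_in_overlap = min_overlap - f  # worst case: all f faulty nodes in the overlap
    return n, quorum, min_overlap, honest_in_overlap
```

Any two quorums share at least F+1 replicas, so at least one non-faulty replica is in both. That shared replica is what prevents two conflicting decisions, regardless of how the network behaves.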

View change is a complex process, triggered only by non-faulty nodes whose timers expire. A non-faulty node whose timer has not expired will not join a view change unless it has seen that F+1 others have. Once enough nodes have voted for the view change, the new leader must decide on the latest checkpoint and ensure, among other things, that non-faulty nodes are caught up with the latest state. When the new primary has collected 2F+1 view-change messages, it multicasts a new-view message. Upon receiving this new-view message, replicas enter view v+1 and re-run the normal-case protocol.
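The thresholds in this paragraph can be sketched as three small functions. The rotation rule (primary = view mod number of replicas) comes from the paper rather than from the text above; the function names and the `sender_id -> requested_view` dictionary are assumptions for illustration, and message validation is omitted.

```python
def primary_for_view(view, n):
    """Primary rotates round-robin: replica (view mod n) leads the view."""
    return view % n


def view_to_join(current_view, view_changes, f):
    """Return the view to join if F+1 replicas already ask for a higher view.

    `view_changes` maps sender_id -> requested view (hypothetical format).
    The smallest requested higher view is chosen.
    """
    higher = sorted(v for v in view_changes.values() if v > current_view)
    if len(higher) >= f + 1:
        return higher[0]
    return None


def new_primary_ready(view, view_changes, f):
    """True once 2F+1 view-change messages for `view` have been collected."""
    votes = sum(1 for v in view_changes.values() if v == view)
    return votes >= 2 * f + 1
```

The F+1 join rule guarantees that at least one non-faulty replica genuinely timed out, so faulty nodes alone cannot force a view change.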

During a view change, no requests are processed. A view change effectively gives a new node the power to assign sequence numbers to requests. The protocol must therefore ensure that the new leader does not reuse old sequence numbers for new requests, whether deliberately or because faulty nodes tricked it into doing so. The safety property of PBFT states that the algorithm provides safety if all non-faulty replicas agree on the sequence numbers of requests that commit locally.
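One way to see how old sequence numbers stay bound to their requests is to sketch how a new primary could rebuild pre-prepares from the view-change messages it collected. This follows my reading of the paper: take the latest stable checkpoint as the lower bound, the highest prepared sequence number as the upper bound, and fill any gap with a no-op. The dictionary layout, the `NULL_DIGEST` placeholder, and the function name are all assumptions.

```python
NULL_DIGEST = "null"  # hypothetical marker for a no-op request


def new_view_pre_prepares(new_view, view_changes):
    """Rebuild (view, seq, digest) pre-prepares for the new view.

    Each entry of `view_changes` is a dict (hypothetical format) with:
      "stable_seq": sequence number of the sender's last stable checkpoint
      "prepared":   {seq: (view, digest)} for requests prepared at the sender
    """
    min_s = max(vc["stable_seq"] for vc in view_changes)
    max_s = max(
        (seq for vc in view_changes for seq in vc["prepared"]),
        default=min_s,
    )

    pre_prepares = []
    for seq in range(min_s + 1, max_s + 1):
        candidates = [vc["prepared"][seq] for vc in view_changes if seq in vc["prepared"]]
        if candidates:
            # Reuse the digest that prepared in the highest earlier view.
            _, digest = max(candidates, key=lambda c: c[0])
        else:
            # Nothing prepared at this number: fill the gap with a no-op.
            digest = NULL_DIGEST
        pre_prepares.append((new_view, seq, digest))
    return pre_prepares
```

Because every sequence number up to the highest prepared one is either reassigned to its original request or burned with a no-op, new client requests can only receive fresh numbers above that range.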

Garbage collection discards messages from the log once replicas have agreed that at least F+1 non-faulty replicas have executed the operation and a replica holds proof of a stable checkpoint. A checkpoint is used to bring another replica up to date or as input to the view-change protocol. Checkpointing is a snapshot-like algorithm. At some trigger event (for example, when the sequence number is 0 mod 100), every replica multicasts a checkpoint message to all other replicas. Replicas collect these checkpoint messages in their logs, and once a replica has 2F+1 matching checkpoint messages, the checkpoint is stable and older log entries can be discarded.

During a view change, a replica sends out a view-change message that contains the new view number and a proof of the last stable checkpoint it knows about. The message also includes the leftover state: requests that prepared but did not commit. For these leftovers, 2F+1 nodes must independently decide to commit the value.
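The checkpoint bookkeeping described above can be sketched as a small tracker. This is an illustration under assumptions: the class name `CheckpointTracker`, the default interval of 100, and the `seq -> entry` log layout are invented for this post, and checkpoint messages are assumed to be authenticated before they arrive here.

```python
class CheckpointTracker:
    """Tracks checkpoint votes and truncates the log at stable checkpoints."""

    def __init__(self, f, interval=100):
        self.f = f
        self.interval = interval
        self.votes = {}      # (seq, state_digest) -> set of replica ids
        self.stable_seq = 0  # sequence number of the last stable checkpoint
        self.log = {}        # seq -> logged protocol messages (opaque here)

    def is_checkpoint_seq(self, seq):
        # Trigger rule from the text: sequence number = 0 mod interval.
        return seq % self.interval == 0

    def on_checkpoint(self, seq, state_digest, sender):
        """Record a checkpoint vote; return True if it made `seq` stable."""
        voters = self.votes.setdefault((seq, state_digest), set())
        voters.add(sender)
        if len(voters) >= 2 * self.f + 1 and seq > self.stable_seq:
            self.stable_seq = seq
            self._garbage_collect()
            return True
        return False

    def _garbage_collect(self):
        # Drop log entries at or below the stable checkpoint.
        self.log = {s: e for s, e in self.log.items() if s > self.stable_seq}
        # Keep votes for the stable checkpoint itself: they are its proof.
        self.votes = {k: v for k, v in self.votes.items() if k[0] >= self.stable_seq}
```

Votes are keyed by both sequence number and state digest, so replicas that disagree about the state at a checkpoint never count toward the same quorum.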

The view-change procedure ensures progress by allowing replicas to change the leader so that they do not wait indefinitely for a faulty primary. Each backup starts a timer when it receives a request and stops it once the request has been executed. An expired timer causes the backup to suspect the leader and request a view change. The backup then stops accepting normal-case messages and multicasts a view-change message for view v+1, signed by the sender i. The message reports the sequence number n of the last stable checkpoint s, the proof C of that checkpoint's correctness, and the set P of messages with sequence numbers greater than n that backup i has prepared.
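The timer logic and the fields of the view-change message can be put together in a short sketch. The `ViewChange` fields mirror the v+1, n, C, P, and i described above; everything else (the `Backup` class, passing `now` in explicitly instead of reading a clock, the `seq -> digest` layout of prepared requests) is an assumption made to keep the example deterministic.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ViewChange:
    new_view: int            # v + 1
    stable_seq: int          # n: sequence number of the last stable checkpoint
    checkpoint_proof: tuple  # C: checkpoint messages proving the checkpoint
    prepared: tuple          # P: (seq, digest) pairs prepared above n
    sender: int              # i: id of the sending backup


class Backup:
    def __init__(self, replica_id, timeout):
        self.replica_id = replica_id
        self.timeout = timeout
        self.view = 0
        self.stable_seq = 0
        self.checkpoint_proof = ()
        self.prepared = {}     # seq -> digest of prepared requests
        self.pending = set()   # requests received but not yet executed
        self.deadline = None   # None means the timer is not running
        self.accepting = True  # False once a view change has been requested

    def on_request(self, request_id, now):
        self.pending.add(request_id)
        if self.deadline is None:  # start the timer only if it is not running
            self.deadline = now + self.timeout

    def on_executed(self, request_id, now):
        self.pending.discard(request_id)
        # Restart the timer if other requests are still waiting, else stop it.
        self.deadline = now + self.timeout if self.pending else None

    def tick(self, now):
        """Return a ViewChange if the timer expired, otherwise None."""
        if self.deadline is None or now < self.deadline:
            return None
        self.accepting = False  # stop accepting normal-case messages
        self.deadline = None
        prepared = tuple(sorted(
            (s, d) for s, d in self.prepared.items() if s > self.stable_seq
        ))
        return ViewChange(self.view + 1, self.stable_seq,
                          self.checkpoint_proof, prepared, self.replica_id)
```

A real replica would sign the message and multicast it; here `tick` just returns the message so the decision logic can be inspected in isolation.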

In terms of performance, the evaluation runs the Andrew benchmark with a single client, which means each operation completes a full round trip (RTT) before the next is issued (no pipelining). This leaves server resources underutilized, and the overhead of cryptography is not included.