A review of Narwhal and Tusk: A DAG-based mempool and efficient BFT consensus.

This is a summary of the paper and the video presentation.

The paper proposes a Narwhal, A DAG-based mempool protocol. The Narwhal idea is to separate the dissemination and ordering transactions in blockchain system for high performance. The protocol provides the reliability of disseminating transactions and provides the storage for causal history of transactions.

The Narwhal alone is not enough for blockchain consensus protocol to guarantee liveness property under asynchronous network model. Thus, the paper proposes Tusk, a zero-message overhead protocol for maintaining Narwhal’s liveness. Both Narwhal and Tusk protocol create a BFT protocol to reach consensus on blocks metadata.

Narwhal off-loads reliable transactions dissemination to the mempool protocol and relies on consensus to sequence a very small amount of metadata.

The protocol assumes N nodes with up to f < N/3 byzantine nodes. The paper assumes a asynchronous eventually reliable communication link. Narwhal provides key-value block store abstraction to read and write transactions with partial orders on the block. The Narwhal mempool uses a restricted DAG called round-based DAG structure.

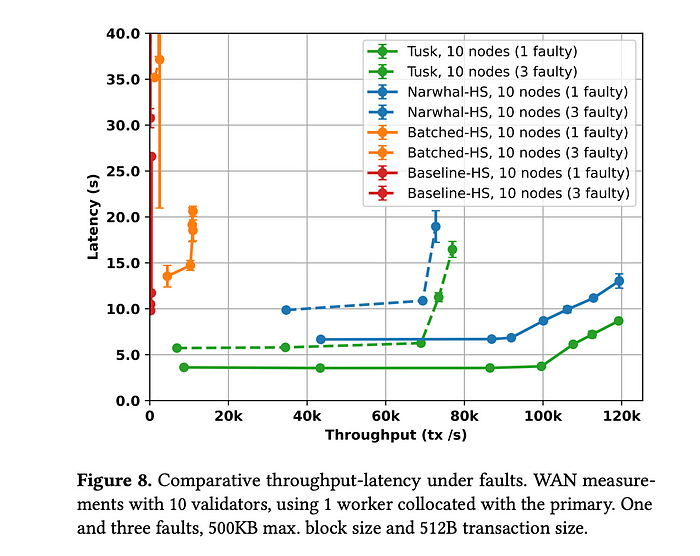

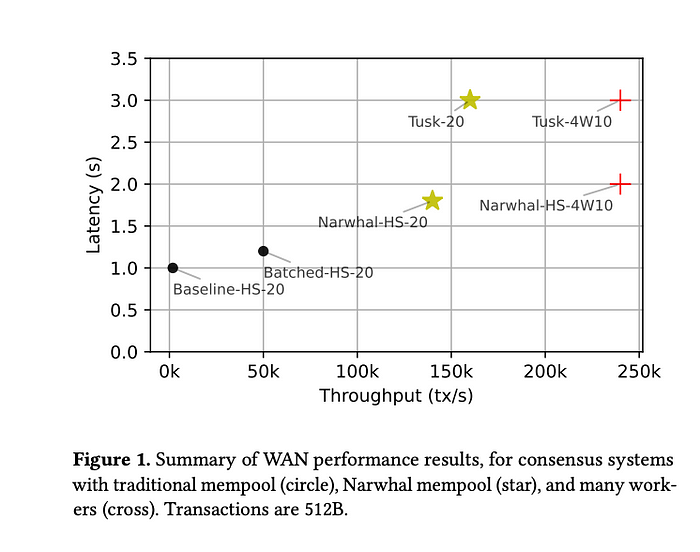

Before I dive deep into the paper, the overview of evaluation results show that Narwhal-HotStuff archives 130K tx/sec with 2 second latency instead of 1800 tx/sec with one second latency in HotStuff. Tusk achieves 160K tx/s with 3 sec latency. Under failure, Narwhal-HotStuff suffers from high latency.

The paper have evaluated three types. The baseline-HS means that the proposer broadcasts the blocks, while Batched-HS means that the proposer broadcast the metadata of the blocks and each validator broadcasts the blocks to the mempool. This is only working under ideal network conditions and archives 50x better baseline results. The Narwhal-HS is a DAG-based, structured Mempool that provides more robust solution and represents the design behind the paper. The Narwhal-HS uses Tusk for asynchronous network that enables each node to examine its local DAG without sending any messages by using a random coin.

The block in Narwhal includes more than a usual block. First, it has a set of transactions. Second, it contains a list of references to previous blocks. The digest of 𝑏 is 𝑑 that used as an identifier to reference the block. Third, the 𝑏 also has a reference encodes a causal happened-before relation between the blocks.

The Narwhal mempool satisfies integrity(Honest nodes return same value), block-availability(write operation returns a certificate of availability which is 2f+1 signed vote and included in the next round), containment(Read Causal operation returns B and B’ where B’⊆B), 2/3-causality(node should receives 2/3 of blocks before block b), and 1/2-chain quality(half of the blocks in B was return by honest nodes). Integrity and block-availability properties provide a separation of dissemination of blocks and consensus. The containment and causality achieve high throughput because of happen-before relationship. The chain quality provides the censorship resistance.

Remember that the design goals of Narwhal are to reduce double transaction when leaders propose blocks and enable scaling out when more sources are available.

Narwhal follows a simple primary-worker architecture. Clients send transactions to validators’ workers. Upon receiving, validators’ workers accumulate them into a batches and broadcast it to every other workers that belong to same validator. Upon receiving 2f+1 acks, workers digest the batches and send it to the validator. The validator maintains the local round and do other things: Receive digest of batches from its workers and accumulate them into the block’s metadata. They also receive certificates of availability for batches at round 𝑟 and accumulate them into a certificate list. In the next round, each validator receives 2f+1 certificates of availability(r-1).

The validation conditions for the reliable broadcast at other validators are also adapted to ensure availability: a primary only signs a block if the batches included have been stored by its own workers. This ensures, by induction, that all data referred to by a certificate of availability can be retrieved.

The Narwhal Mempool uses a BFT version of Threshold clocks as a pacemaker to advance rounds. The paper adapts HotStuff protocol on top of Narwhal called Narwhal-HotStuff that proposes one or more certificates of availability created in Narwhal. Upon commit, the full uncommitted causal history of the certificates is deterministically ordered and committed.

In the Narwhal implementation each validator broadcasts a block for each round 𝑟. If 2𝑓 + 1 validators receive a block, they acknowledge it with a signature. 2𝑓 + 1 such signatures form a certificate of availability, that is then shared, and potentially included in blocks at round 𝑟 + 1. In r+1, certificate of availability must be there to ensure totality property. In any round, certificate of availability should be ensure to guarantee totality.

Workers keep broadcasting the batch of transactions which is not more than 512KB. Then, the primary broadcasts the hash of the block which is 32B.

To remain live under asynchronous or DDoS attacks, Tusk was proposed in the paper. Tusk implies random coin that constructed from an adaptively secure threshold scheme. Tusk gets the causally ordered DAG constructed Narwhal and totally orders its blocks with zero extra communication. Tusk validator operates a Narwhal mempool. Includes in each block information about a random coin.

Every validator interprets the DAG based on its view. It divide the DAG in waves each of which consists of 3 consecutive rounds. Propose, vote, and produce a randomness to elect a leader.

In Tusk algorithm, every validator locally interpret its local view of the DAG and use the shared randomness to determine the total order. Tusk divides DAG into waves, three rounds per a committed block. The first round of a wave each validator proposes its block (and consequently all its causal history); in the second round each validator votes on the proposals by including them in their block; and in the third round validators produce randomness to elect one random leader’s block in retrospect.

To guarantee that all honest validators eventually order the same block leaders, after committing the leader in a wave 𝑤 , 𝑣 sets it to be the next candidate to be ordered and recursively go back to the last wave 𝑤 ′ in which it committed a leader.

The main features of Narwhal are scaling out and ensuring both availability and integrity under asynchronous network. The Narwhal can scale number of workers for large number per validator. The Tusk features are DOS resilient, no message overhead, and high message throughput.

Experiment parameters are 4 validators each running with a single worker, a batch size of 500KB, a block size of 1KB, a transaction size of 512B, and one benchmark client per worker submitting transactions at a fixed rate for a duration of 5 minutes. Then, vary the baseline parameters through experiments to illustrate the impact on performance.