A paper review: The design, architecture, and performance of the Tendermint Blockchain Network

This is a summary of this paper and a video presentation of the paper.

Tendermint is the consensus engine of Cosmos. The paper studies Tendermint’s design and architecture and evaluates the system in a realistic environment.

Tendermint supports general-purpose blockchain applications written in any language and allows both open and closed membership. There are 200 projects using Cosmos and Tendermint.

Tendermint is a variant of PBFT and uses slashing-style proof-of-stake, which was introduced in Casper. Because it supports open membership, Tendermint relies on a gossip layer. The gossip layer helps the network stay connected and recover quickly from crashed nodes.

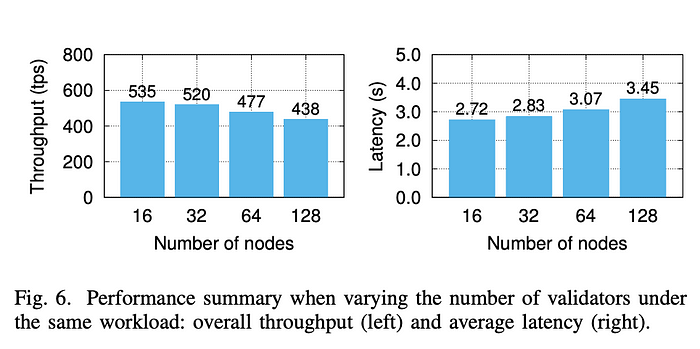

Tendermint, like most replicated systems, degrades to some degree as the network grows. For example, an 8x increase in Tendermint's network size leads to an 18% reduction in throughput.

Tendermint's design goals are: deployment flexibility across many administrative domains, where each domain chooses its own membership type (open or closed); scalability, with strategies to avoid performance degradation; Byzantine fault tolerance (BFT) in hostile environments; language independence, so that applications can be written in many languages; and light clients for resource-constrained nodes.

The figure below shows Tendermint’s architecture.

The following are the main modules in the Tendermint core architecture.

1- Mempool is a protocol for receiving, validating, storing, and broadcasting transactions submitted by clients. Every transaction a node receives is sent to the application for validation over the mempool ABCI connection. Once a transaction is validated, the node appends it to the mempool. Nodes remove transactions from the mempool after consensus is reached. The mempool is locked while transactions are executed.

2- Consensus algorithm: all nodes communicate in phases and collect a quorum of votes in each phase. If the leader is Byzantine, it is skipped by the rotation function.

3- Validator Set: each block in the blockchain is committed by a set of valid validators. Each validator has a voting power and signs with cryptographic keys. The validator set can change from one block to the next through special application commands, and every change is stored in the blockchain.

4- Gossip: a separate module in Cosmos. Every honest peer sends meaningful messages for the current round that help the network reach consensus.

5- Evidence: a module that identifies and punishes Byzantine nodes. Tendermint recognizes two kinds of misbehavior: a node publishes to clients blocks that were not committed, or a validator casts votes for more than one block in the same round and height. When misbehavior occurs, light clients or Tendermint nodes report it to the evidence module. The evidence module collects the misbehavior report and its proof, then places it in the evidence pool. Evidence is gossiped from the pool to the rest of the network. Nodes verify each misbehavior report and forward it to their peers. When a block is committed, the evidence of misbehavior is included in the block and delivered to the application. The typical approach adopted by Cosmos blockchains in production is to slash the funds (stake) bonded by that validator and remove it from the validator set.

6- Fast Sync is a protocol for exchanging committed blocks. It is mostly used when a node joins or rejoins the network. Technically, a client node in the Fast Sync protocol periodically broadcasts StatusRequest messages to all peers. A node, regardless of its role, responds with a StatusResponse message. Based on this information, the client node selects peers to which it sends BlockRequest messages, each referring to a specific height.

7- State Sync is a protocol that allows nodes to join the network by using application-level snapshots. The protocol is an alternative to joining consensus at the genesis state and replaying all historical blocks until the node catches up with its peers. The protocol is initiated by a node in client mode, which sends snapshot request messages to each new peer. Upon receiving a snapshot request message, a node in server mode contacts the application, namely the instance of the replicated application it hosts, to retrieve a list of available snapshots.

The application should return a list of snapshot descriptors, which are sent back to the peer. Upon receiving a snapshot response from a peer, a node in client mode adds the reported snapshot descriptor to a pool of candidate snapshots. Once a client node has a candidate snapshot, it sends the descriptor to the application, which may accept or reject the snapshot. Once a full snapshot is retrieved and installed by the application, the node is at the blockchain height at which the snapshot was taken, and it can switch to Fast Sync or to the consensus protocol.
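The second kind of misbehavior handled by the Evidence module (item 5), a validator voting for more than one block in the same round and height, can be sketched as follows. This is a minimal illustration, not Tendermint's implementation: the names `Vote`, `DuplicateVoteEvidence`, `EvidencePool`, `observe`, and `drain_for_block` are hypothetical, and signature verification, vote types, and gossip are left out.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass(frozen=True)
class Vote:
    validator: str  # hypothetical validator identifier
    height: int
    round: int
    block_id: str   # identifier of the block being voted for


@dataclass(frozen=True)
class DuplicateVoteEvidence:
    vote_a: Vote
    vote_b: Vote


class EvidencePool:
    """Toy pool: remembers the first vote per (validator, height, round)."""

    def __init__(self) -> None:
        self._first_vote: Dict[Tuple[str, int, int], Vote] = {}
        self.pending: List[DuplicateVoteEvidence] = []

    def observe(self, vote: Vote) -> Optional[DuplicateVoteEvidence]:
        """Record a vote; return evidence if it conflicts with an earlier one."""
        key = (vote.validator, vote.height, vote.round)
        first = self._first_vote.setdefault(key, vote)
        if first.block_id == vote.block_id:
            return None  # first sighting or a repeat of the same vote
        evidence = DuplicateVoteEvidence(first, vote)
        if evidence not in self.pending:  # avoid storing the same proof twice
            self.pending.append(evidence)
        return evidence

    def drain_for_block(self) -> List[DuplicateVoteEvidence]:
        """Hand pending evidence to the next committed block and clear the pool."""
        included, self.pending = self.pending, []
        return included
```

In this sketch the pair of conflicting votes is itself the proof. A real node would also check both signatures before accepting the report and forwarding it to peers.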

ABCI is the interface between Tendermint and the replicated application. It has four connections: the consensus connection, the mempool connection, the snapshot connection, and the query connection.

The experiments were run across 16 AWS regions on all continents, with all clients co-located on a single server. Clients submitted 1 KB transactions evenly to the validators (12 clients per validator) and waited until each transaction was included in a block committed by Tendermint. Non-validator nodes are either seed nodes, which provide a node with a list of peers it can connect to, or learner nodes, which deliver blocks and measure performance.

The mempool can store up to 5000 transactions, with a maximum total size of 1 GB, and the block size is 20 MB. The maximum send and receive rates of each connection are both 5000 KB/s, and the intervals that govern gossip are 100 ms.
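To make the mempool limits concrete, here is a minimal sketch of a mempool that enforces a transaction count and a byte budget, validates each transaction through the application, and drops transactions once they are committed. The class and method names (`Mempool`, `add`, `reap`, `remove_committed`) and the `check_tx` callback are hypothetical stand-ins rather than Tendermint's API. Reading 1 GB as 2^30 bytes is also an assumption, and locking during execution is omitted.

```python
from collections import OrderedDict
from typing import Callable, Iterable, List

MAX_TXS = 5000       # transaction limit used in the experiments
MAX_BYTES = 1 << 30  # 1 GB byte limit (assumed here to mean 2**30 bytes)


class Mempool:
    def __init__(self, check_tx: Callable[[bytes], bool],
                 max_txs: int = MAX_TXS, max_bytes: int = MAX_BYTES) -> None:
        self._check_tx = check_tx  # stand-in for the mempool ABCI connection
        self._txs: "OrderedDict[bytes, None]" = OrderedDict()  # arrival order
        self._bytes = 0
        self._max_txs = max_txs
        self._max_bytes = max_bytes

    def add(self, tx: bytes) -> bool:
        """Validate a transaction and append it if there is room."""
        if tx in self._txs:
            return False  # already known
        if len(self._txs) >= self._max_txs:
            return False  # transaction count limit reached
        if self._bytes + len(tx) > self._max_bytes:
            return False  # byte limit reached
        if not self._check_tx(tx):
            return False  # rejected by the application
        self._txs[tx] = None
        self._bytes += len(tx)
        return True

    def reap(self, max_block_bytes: int) -> List[bytes]:
        """Pick transactions in arrival order until the block is full."""
        picked: List[bytes] = []
        size = 0
        for tx in self._txs:
            if size + len(tx) > max_block_bytes:
                break
            picked.append(tx)
            size += len(tx)
        return picked

    def remove_committed(self, committed: Iterable[bytes]) -> None:
        """Drop transactions that were included in a committed block."""
        for tx in committed:
            if tx in self._txs:
                del self._txs[tx]
                self._bytes -= len(tx)

    def __len__(self) -> int:
        return len(self._txs)
```

With 1 KB transactions, the 5000-transaction limit is reached at roughly 5 MB, long before the 1 GB byte limit, so in this setup the count limit is the one that binds.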

Figure 2 depicts the number of neighbors a node has in the overlay network and the distance between two nodes in the overlay network. The cumulative distributions are represented by the green lines, while the bars represent the portion of values in each x-axis interval.

Figure 3 presents the distribution of measured latencies between the 16 AWS regions hosting validator nodes (left) and the approximate distribution of latencies between nodes (right).

Two latencies are measured: the time required to commit a block of transactions, and the time it takes for a client transaction to be ordered.

A block takes 2.53s on average to commit, with 96% of blocks taking between 2.3s and 2.7s. The latencies of submitted transactions show more variability: 3.45 ± 0.99s on average, concentrated in two intervals. About 40% of the latencies were between 2s and 3s, in line with the typical block latency, while a similar portion were around 4.5s, which is closer to the average duration of two blocks.

Figure 5 presents the throughput. On the left, the graph shows the throughput measured at every delivered block (line with points), which varies between blocks, and over every two blocks (green line). The overall throughput is 438 transactions per second (tps). On the right of Figure 5, the graph presents the distribution of the number of transactions included in each block.

As depicted in the figure below, Tendermint's performance does not increase as the network scales to 16, 32, 64, and 128 validators.

After 42 validators were killed, the clients assigned to the crashed validators waited for a timeout and then re-routed their requests to another validator chosen at random. It took around 6s for nodes to detect the failure of all crashed peers.

The overall throughput is severely impacted: 164 tps, 63% lower than in the fault-free experiments (438 tps). The average latency was 9.17 ± 8.13s, 2.7x higher than in the baseline experiments (3.45 ± 0.99s). In fact, almost 30% of transactions presented latencies above 10s, and about 12% above 20s.
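The two headline ratios can be checked directly from the reported figures. The snippet below is only an arithmetic check, and the variable names are illustrative.

```python
baseline_tps, faulty_tps = 438, 164
baseline_latency_s, faulty_latency_s = 3.45, 9.17

# Relative throughput loss with crashed validators
throughput_drop = 1 - faulty_tps / baseline_tps
# How many times higher the average latency became
latency_ratio = faulty_latency_s / baseline_latency_s

print(f"throughput drop: {throughput_drop:.1%}")  # throughput drop: 62.6%
print(f"latency ratio: {latency_ratio:.2f}x")     # latency ratio: 2.66x
```

Rounded, these give the 63% reduction and the 2.7x latency increase quoted above.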